Building a data warehouse

Automated data quality checks using SQL

Only clean and QA approved data for your data warehouse

If you are building a data warehouse solution or/and performing some admin tasks around databases then this article is for you. It answers the question how to test data quality and send email notifications in case something is not quite right there. Ideally every data user would like to be notified on any anomalies in data especially when we talk about crucial reporting components and fields.

Every data warehouse might have dozens of data pipeline feeds where it comes from APIs, connected databases, data streams like Kinesis or Kafka and third party data providers. I previously wrote how connect all these with easy and you can read about my setup here: How to Handle Data Loading in BigQuery with Serverless Ingest Manager and Node.js

So when the data come in you want to make sure all the fields are in place and everything is ready to use. For example, your reports might be using date column and you want to ensure it lands in your data warehouse correctly formatted or at least not being empty (nonNull).

Here is a simple, yet effective framework for this.

Prerequisites, Libraries and setup

- Google BigQuery. I will use BigQuery as a data warehouse solution because it's free but you can use any alternative you like, i.e. Snowflake, Redshift, any db engine. Most of them can send email notifications anyways. And this is all what it is about.

- Standard SQL. This is not an advanced tutorial. SQL provided here will work in any database engine or you will be able to adjust it to meet your requirements. Easily.

IMPORTANT: BigQuery is not entirely free but Google provides free credits and even if you don't have any left I will use data which won't cost you anything to query. All you need is Google account and BigQuery API enabled.

You will learn how to:

- Check data quality using SQL

- Send email notifications

- Create test tables and mock data with SQL

- Detect data anomalies

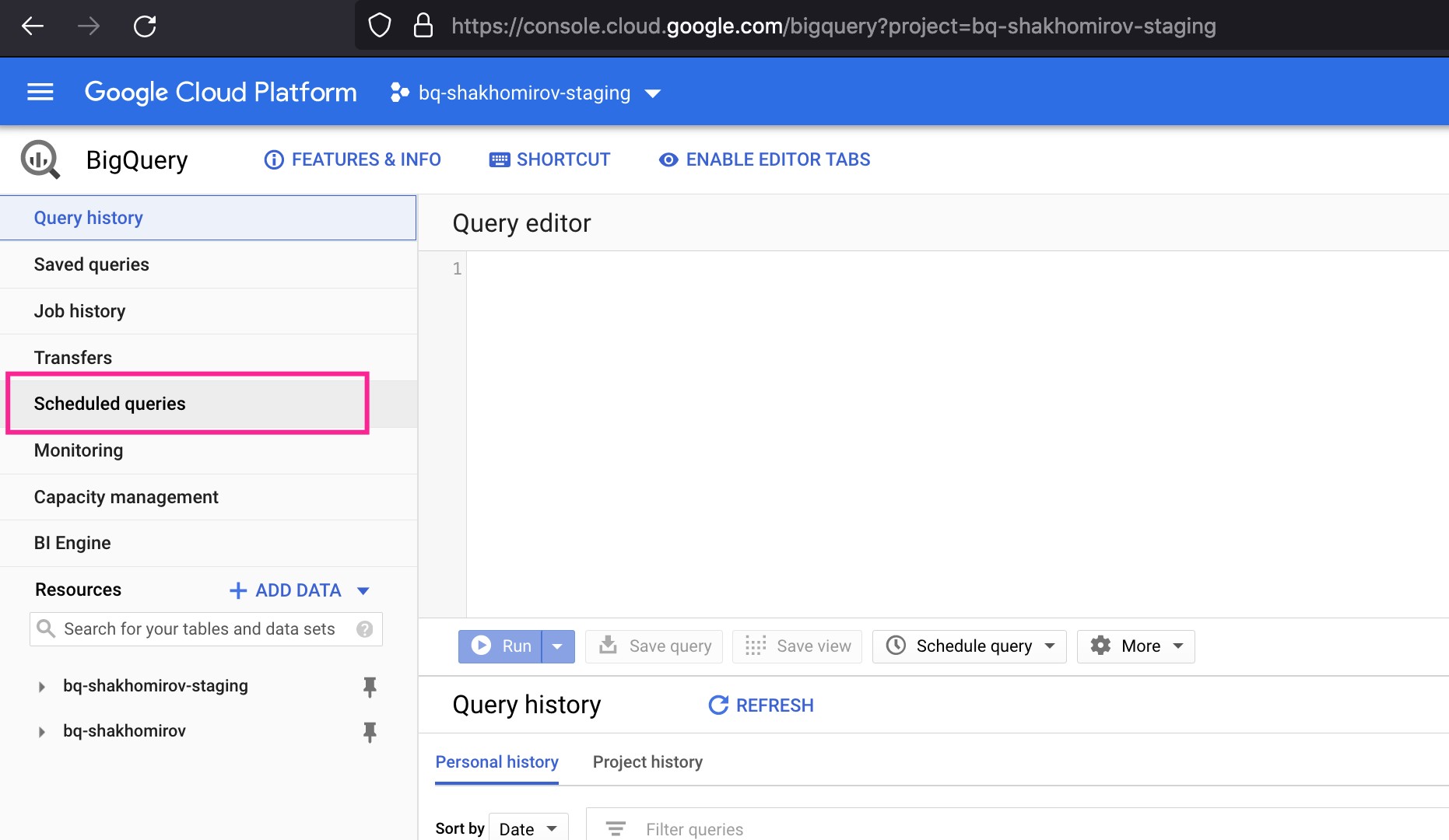

How to schedule email notifications

In BigQuery it is very simple.

Go to scheduled queries:

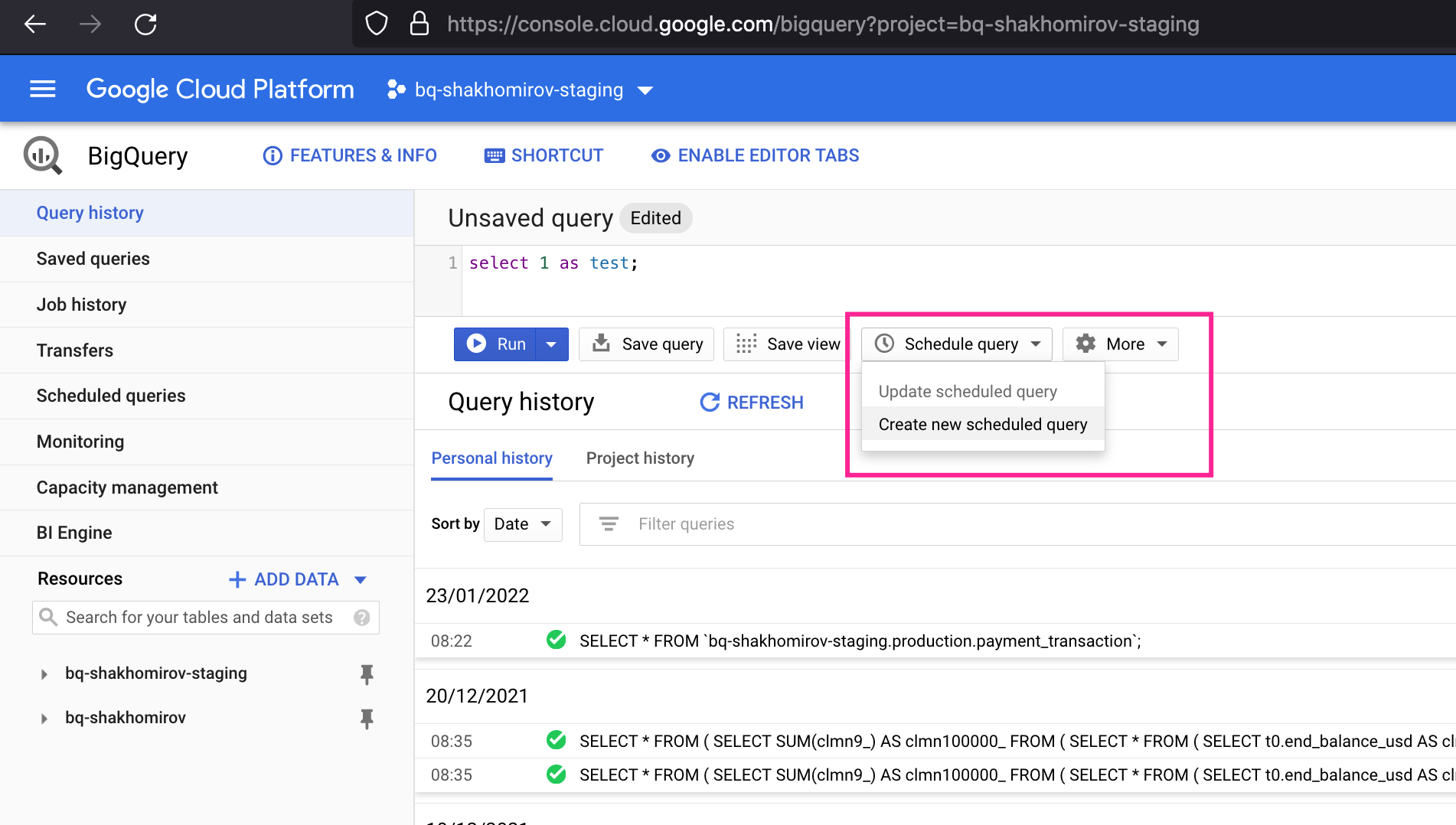

Enter your SQL, i.e. something to test it:

select 1 as test;and click schedule query below:

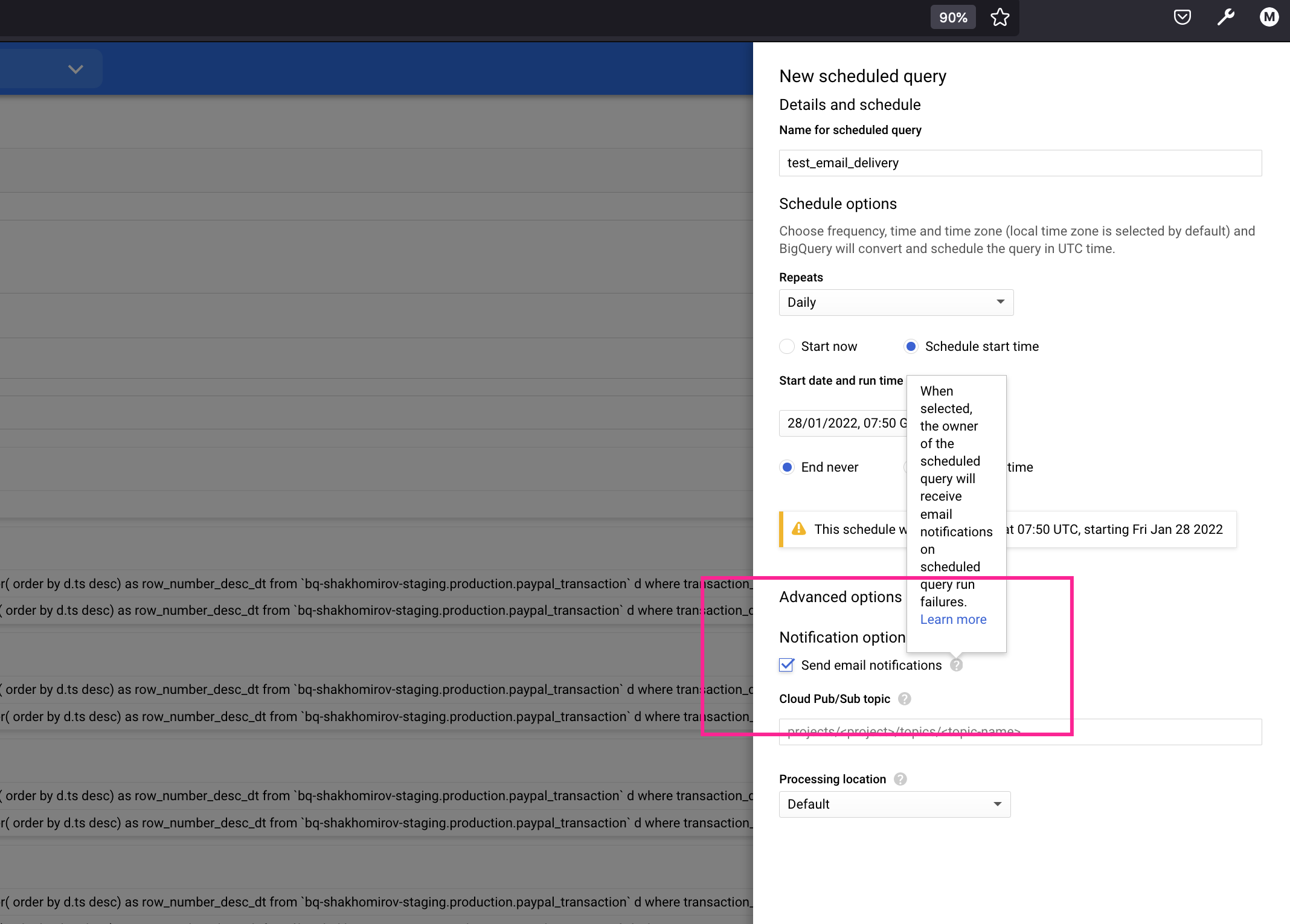

Give your query a name and click "Send email notifications" below. So now you will start receiving notification if your SQL query fails:

All you need now is to raise an exception if any record in your query result meets the row condition you specify.

How to add data checks using row conditions

Basically this is how you test your data meet quality requirements you need.

Let's try imagine some real-life scenario when your data must pass quality assurance process.

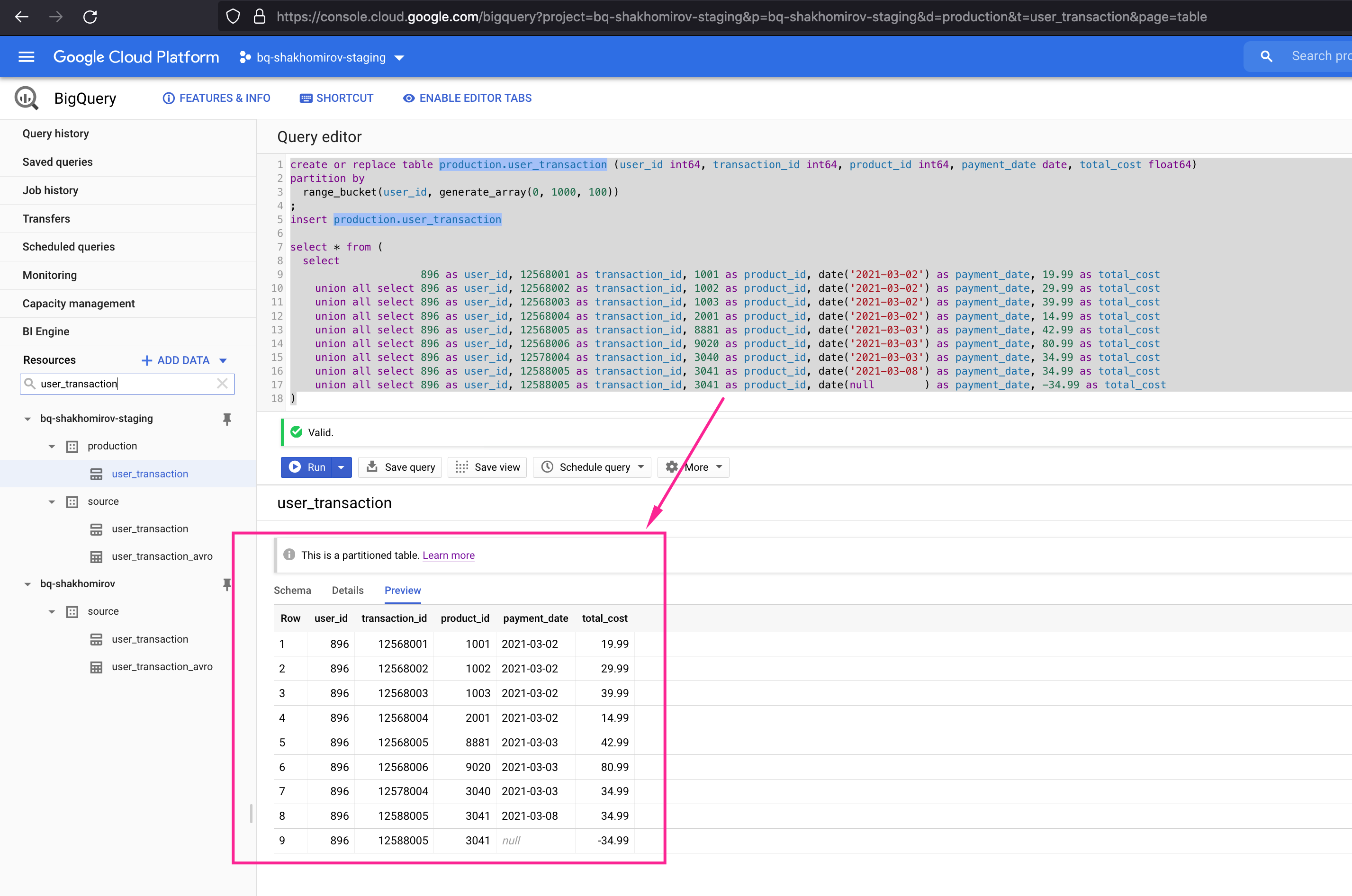

For example, I have a user_transaction table in my staging data warehouse which can be created with this SQL script in yours:

Let's create a table

If you bump into an error like I did:

error: Cannot set write disposition in jobs with DDL statementsjust refresh your page.

Probably some cahced UI elements issue when you web page stays opend for too long.

Read more about creating tables in BigQuery here: https://cloud.google.com/bigquery/docs/creating-partitioned-tables#sql

Outcome

Now I would want to check if there are any potential issues with data

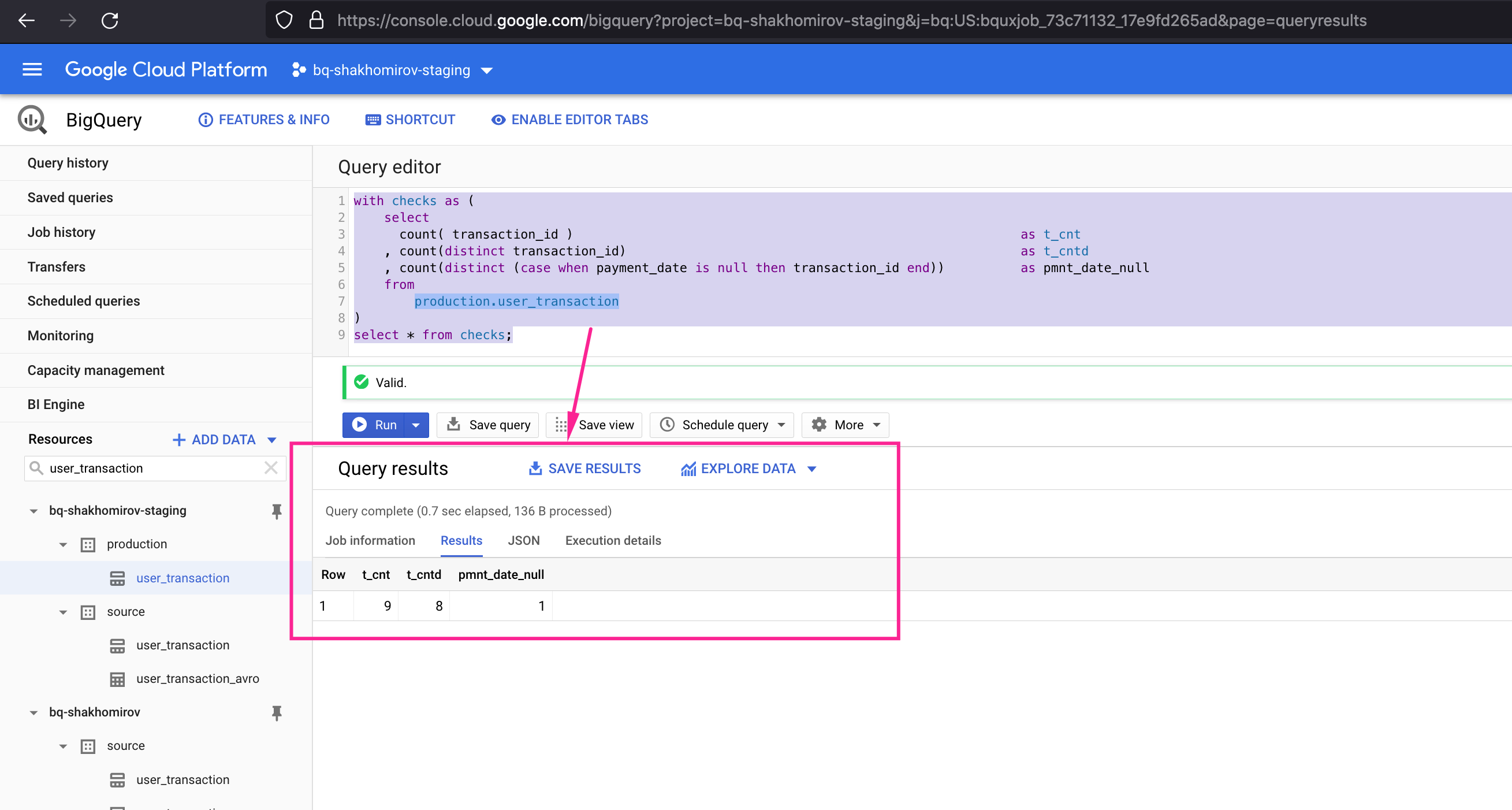

Here I will count records with missing (NULL) values and check if you get any duplicates from source pieline:

As a result you will see that total number of transactions is more than unique transaction count and that there is one payment_date with NULL value:

I would definitely want to get an email notification if that was detected in my data ingested in my data warehouse yesterday.

So how do we do it?

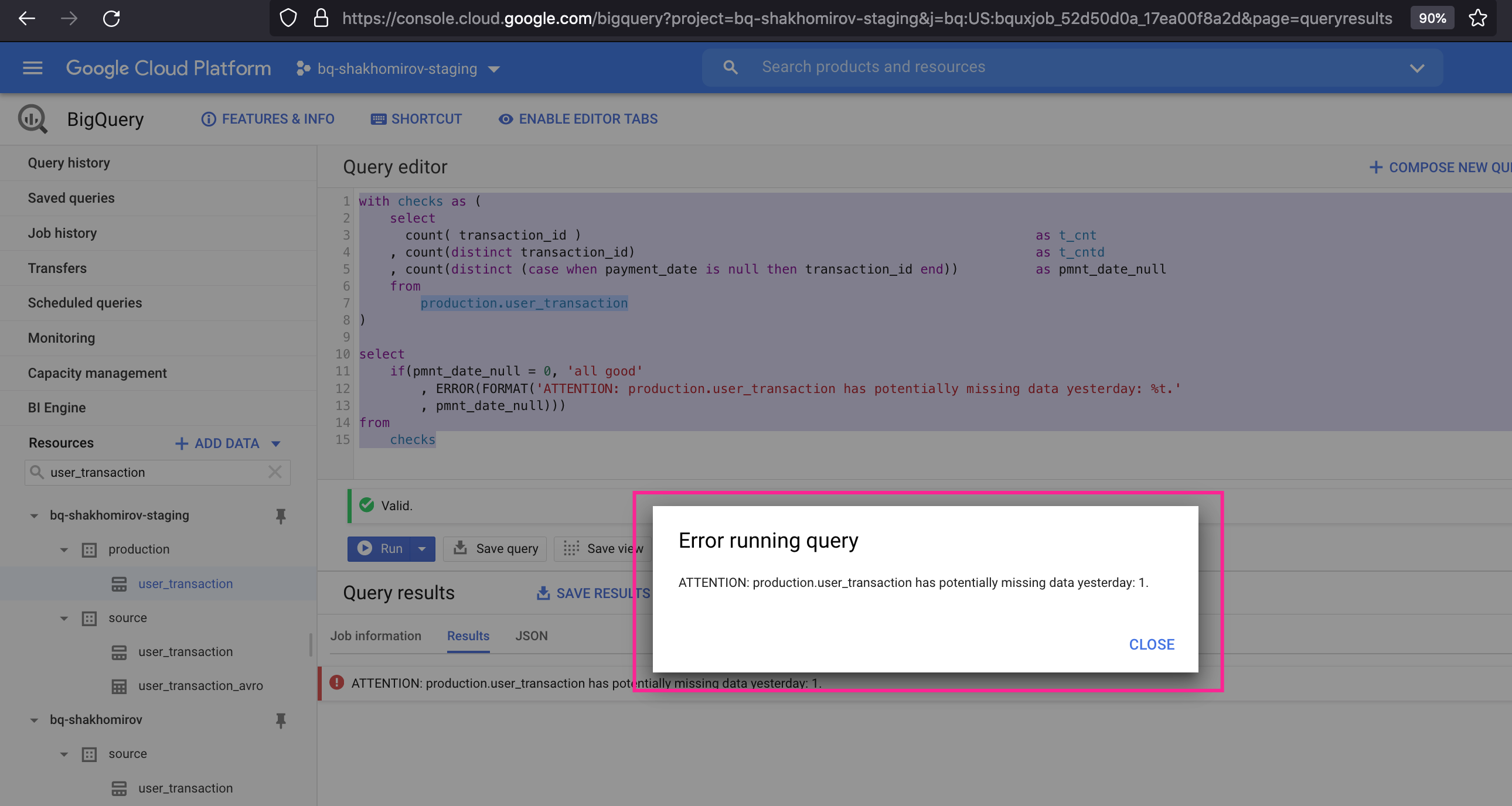

Let's add some more SQL and raise an exception

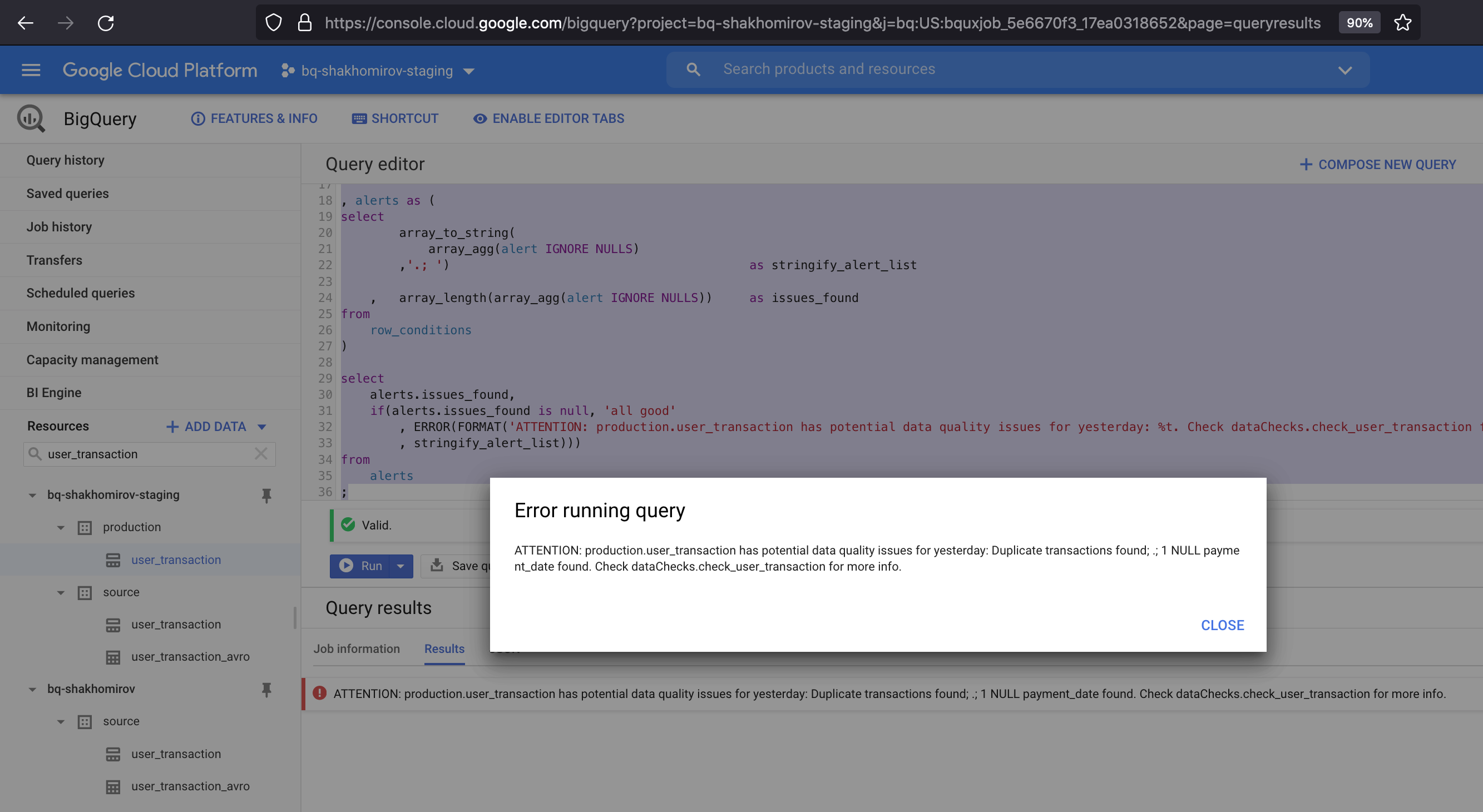

This will trigger an email notification:

Error (BigQuery exception) will be raised:

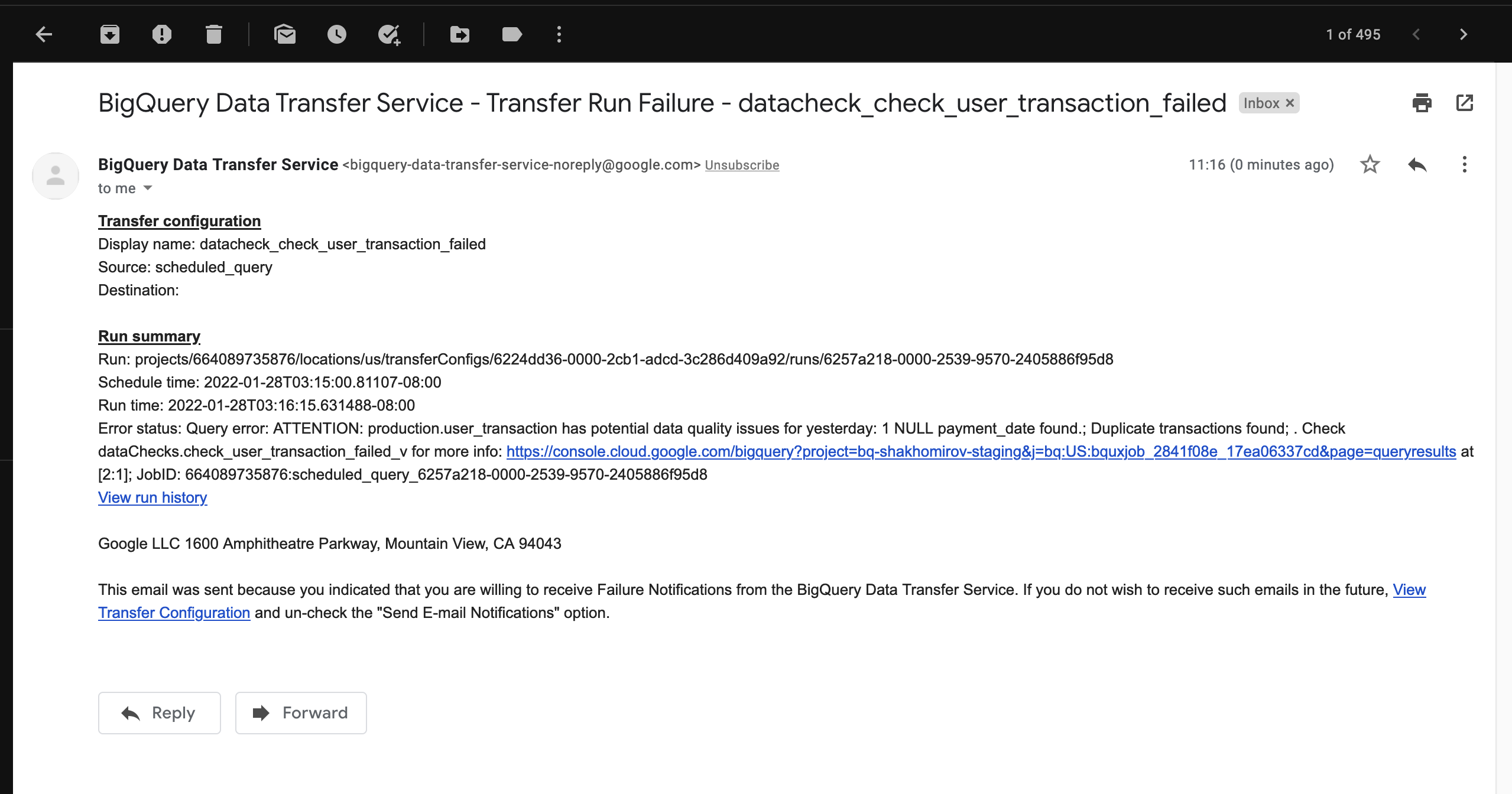

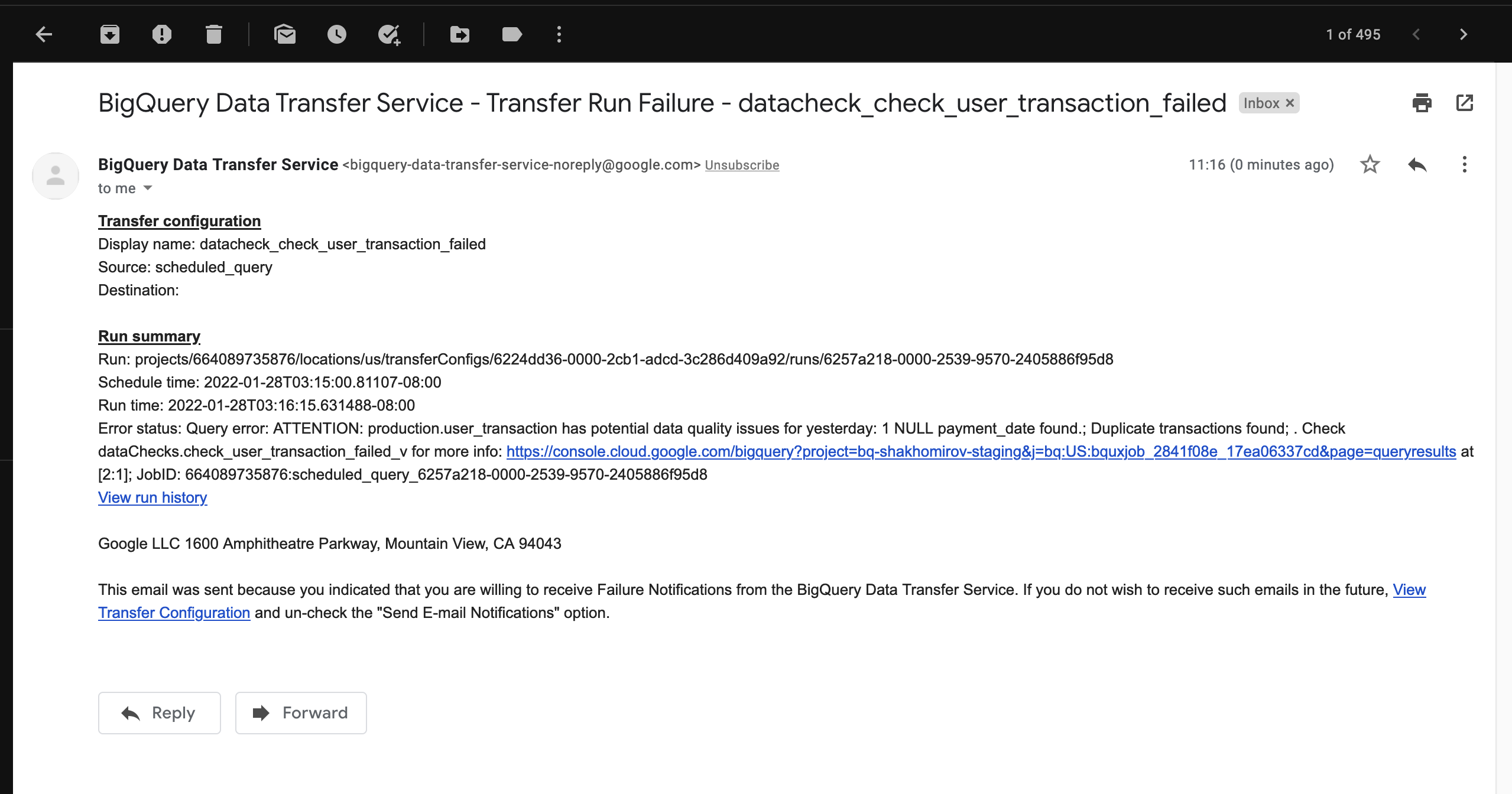

And I will receive an email saying:

ATTENTION: production.user_transaction has potentially missing data yesterday: 1.

It's great. However, I would like to receive a complete summary of potential issues with data in my email.

Let's summarise all potential data quality issues

We could use something like:

This would trigger an email notification when any of our checks failed but wouldn't be very informative saying:

ATTENTION: production.user_transaction has potentiall data quality issues yesterday: %t.

Instead I would want to get something like this:

ATTENTION: production.user_transaction has 1 duplicated transaction and 1 payment_date with NULL value.

Let's see what we need to do to achive this using SQL

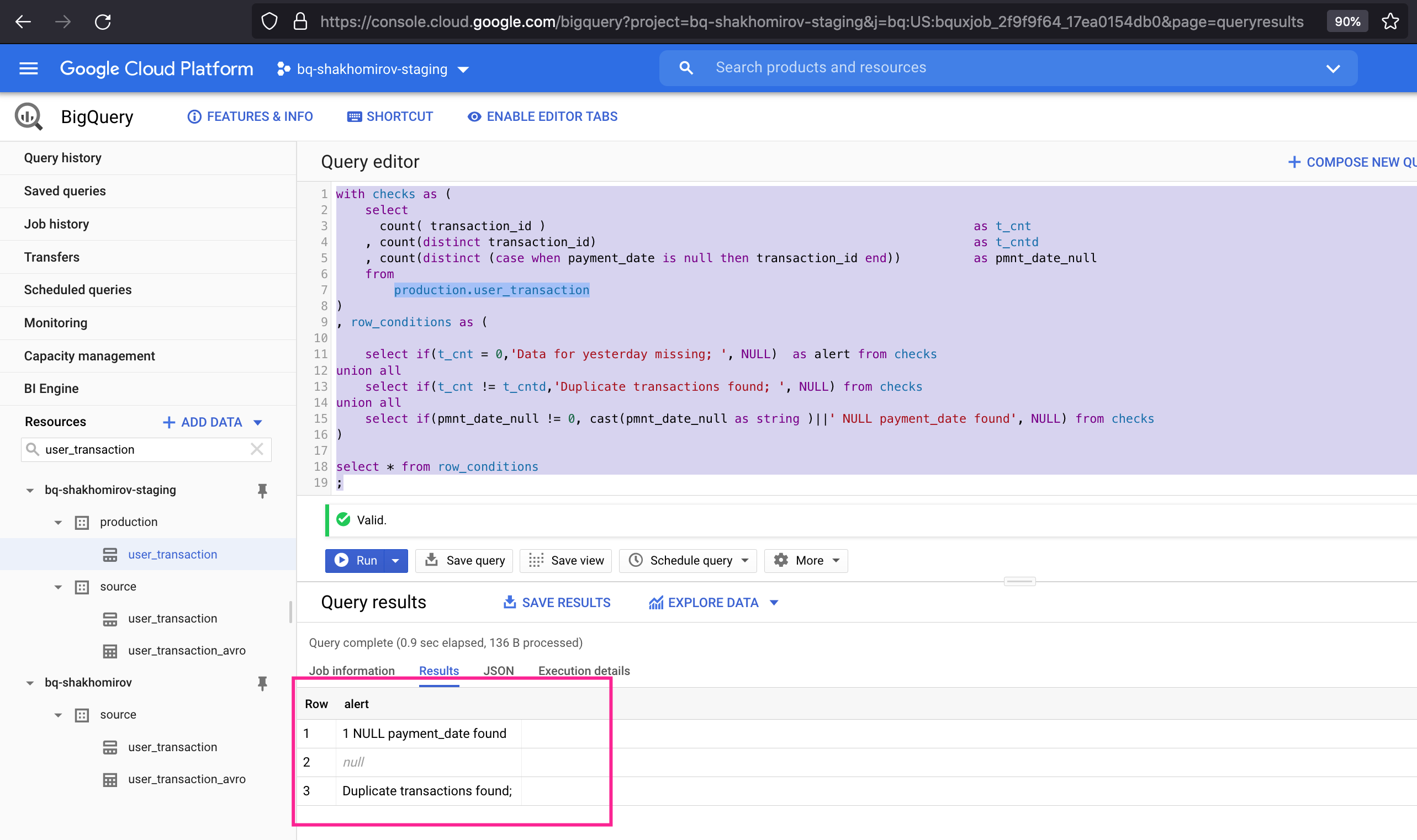

To summarise failed data quality checks you would want to use something like this:

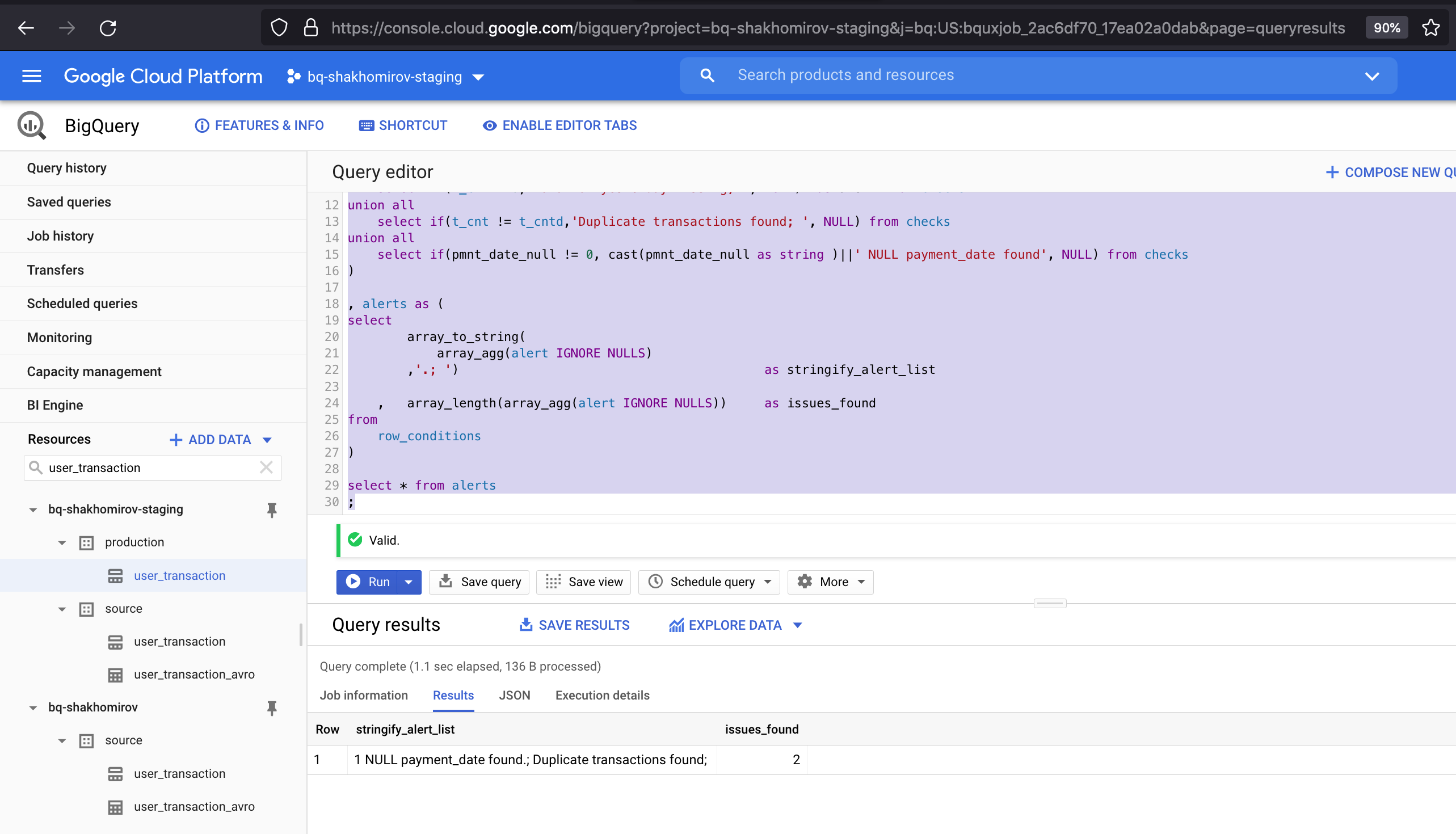

Result:

In that SQL above I've created a CTE with row conditions which define the alerts I need.

I will use it to generate a complete summary of data quality alerts to include in email notification.

There are plenty of ways to achive this using SQL. It's very flexible and that's why I like it but I decided to go with BigQuery array functions https://cloud.google.com/bigquery/docs/reference/standard-sql/array_functions

Let's see how to do it:

As a result you will see summarised text message of potential data quality issues detected:

Finally let's wrap this up with BigQuery exception so it could send us an email:

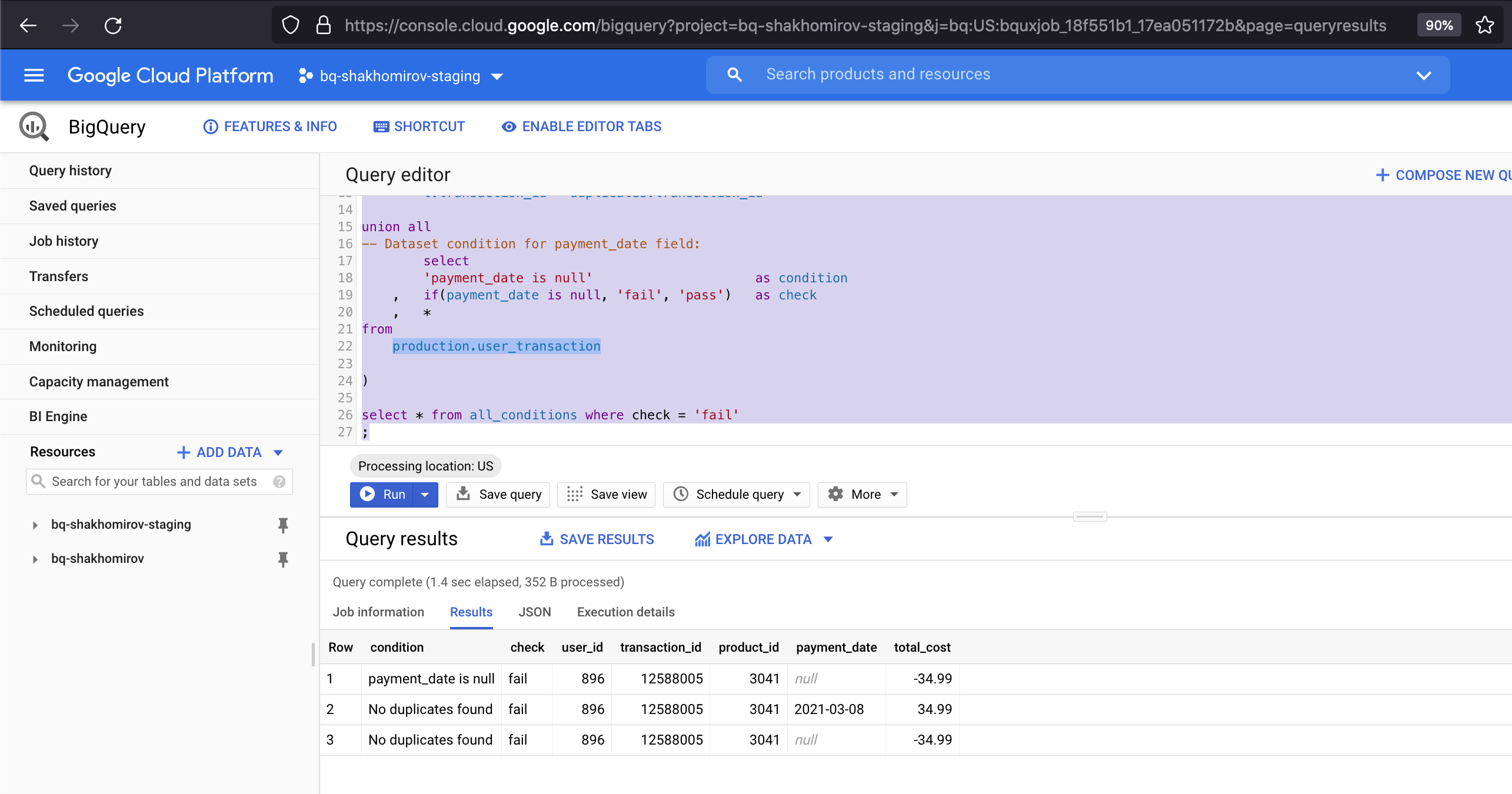

Let's create that dataChecks.check_user_transaction_failed_v view so it is easier to find exact records which failed quality checks

So now when we have this view we can easily investigate data quality issues when we receive a notification.

The usefull thing is that if you can add a view URL link into that BigQuery notification message so Google then infere it as such and you will be able to click it:

Conclusion

This is a simple and reliable data quality framework which most of the modern data warehouses support. Ultimately it allows to check your data with views and detect potential data quality issues with ease. It is not only missing data and NULL values. In dataset conditions almost everything can be used, i.e. use regex function to check data meet particular pattern or any other combined conditions for rows where multiple columns are being used. Need anomaly detection? It's simple. Just add 30 day moving average and a treshold into your dataset conditions and get those email notification when treshold breached.

Resources

- [1]: https://cloud.google.com/bigquery/docs/creating-partitioned-tables#sql

- [2]: https://cloud.google.com/bigquery/docs/reference/standard-sql/array_functions

© Mike Shakhomirov

© Mike Shakhomirov

Comments

Jen Lopez November 13, 2021

Very useful.